Usability testing isn't just about watching people click around on a screen. It’s a structured process where you plan specific tasks, observe real users interacting with your platform, and report the findings to make things better. It is, without a doubt, the most direct way to find out what’s causing friction and to confirm your digital experience actually works for the people it was built for.

Why Usability Testing Is a Business Imperative

Think of usability testing less as another box to check on a project plan and more as a critical investment in your Digital Experience Platform (DXP). When you're working with powerful platforms like Sitecore or SharePoint, skipping this step is a huge business risk.

You're risking low user adoption, a terrible return on a significant platform investment, and even brand damage from users getting stuck in frustrating journeys. This guide isn't about textbook definitions; it's about the real-world business impact.

We're focused on finding real opportunities to boost engagement, make critical workflows smoother, and drive results you can actually measure. It’s the difference between launching a platform that just works and one that truly excels. A good testing process can transform a complex DXP from a clunky tool into a powerful, intuitive asset.

The Core Process at a Glance

For a platform like Sitecore, where personalization and complex user journeys are everything, you need a clear framework. The same goes for a SharePoint intranet, where the entire goal is to make employees more efficient.

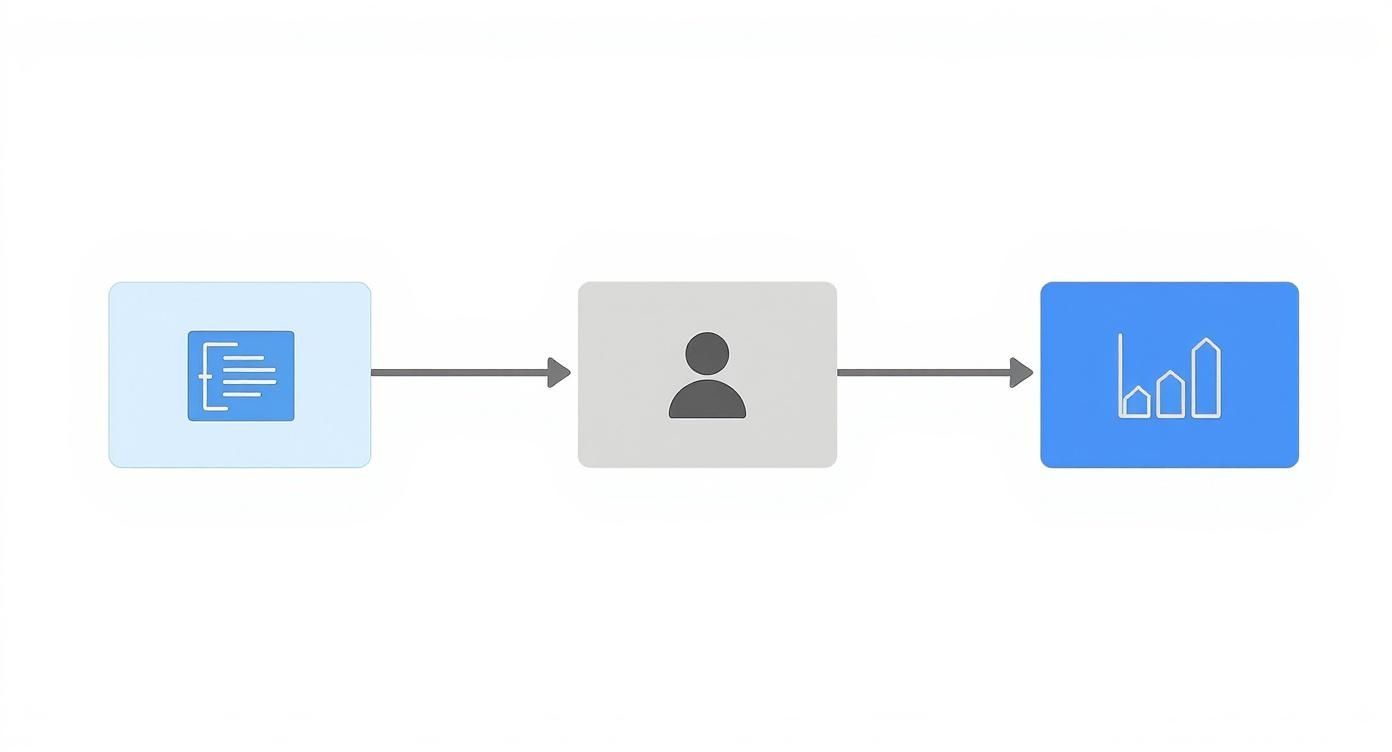

This simple flow chart gives you a bird's-eye view of the process. It breaks usability testing down into three essential phases (planning, testing, and reporting) that turn raw user feedback into concrete improvements for your platform.

Even with its proven value, it's surprising how many companies skip usability testing. Reported figures suggest that only about 55% of companies are doing any kind of online usability testing. That’s a massive missed opportunity, especially when these tests can pinpoint up to 85% of usability problems before they ever affect your audience.

A usability issue in the checkout flow of a Sitecore Commerce site isn't just a design flaw; it's lost revenue. A confusing document library in a SharePoint portal isn't just an inconvenience; it's a direct hit to productivity.

Ultimately, the goal is to build a seamless journey that feels completely natural to the user. By finding and fixing friction points, you're directly contributing to a better digital experience. To dive deeper, take a look at our guide on how to improve customer experience with a user-focused mindset. When you invest in usability, you're investing in your users' success—which is what fuels your own.

Building Your Strategic Testing Blueprint

A successful usability test is won long before the first participant clicks a button. The real work happens upfront, in crafting a strategic blueprint—a detailed plan that turns vague intentions into a clear path for gathering actionable insights. Without a solid plan, you're just collecting opinions. With one, you're conducting research that actually drives business results.

This is where you shift from a generic goal like "improve the website" to something specific and measurable. If you're serious about following user experience design best practices, this level of clarity isn't just nice to have; it's non-negotiable.

Think about it in a real-world context. For a Sitecore Commerce implementation, a vague goal is useless. A powerful, strategic objective sounds like this: "Reduce cart abandonment by 15% by identifying and removing friction points in the three-step checkout flow."

Or, if you're working with a SharePoint intranet, "make it easier to find documents" just won't cut it. A much better goal is: "Decrease the time it takes for new hires to find and download all required onboarding documents by 30%." See the difference?
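A target like "by 30%" only helps if you can check it against measurements. Here is a minimal sketch of that arithmetic; the function names and the before/after numbers are invented for illustration and would come from your own baseline and follow-up tests.

```python
def relative_reduction(baseline: float, current: float) -> float:
    """Return the fractional reduction from baseline to current (0.30 means 30%)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline


def target_met(baseline: float, current: float, target: float) -> bool:
    """True when the measured reduction reaches the target fraction."""
    return relative_reduction(baseline, current) >= target


# Hypothetical numbers: new hires needed 20 minutes before the redesign, 13 after.
baseline_minutes = 20.0
current_minutes = 13.0
print(f"Reduction: {relative_reduction(baseline_minutes, current_minutes):.0%}")
print("30% target met:", target_met(baseline_minutes, current_minutes, 0.30))
```

The same check works for the cart abandonment objective: pass abandonment rates instead of minutes.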

Crafting Realistic User Scenarios

Once your objective is locked in, you need to create realistic user scenarios. These aren't just simple instructions; they're short stories that give participants the context and motivation they need to perform tasks. They should feel like something a real person would actually do on your platform, whether it's Sitecore or SharePoint.

Good scenarios put the user in a believable situation, giving them a genuine reason to complete the task. This is how you uncover natural behaviors and frustrations.

Here’s how that looks in practice:

Platform: Sitecore XP

Scenario Context: "Imagine you're a returning customer who recently looked at a product on our site. You just got a personalized email with a discount for that very item. Your goal is to find it again, add it to your cart, and use the promo code from the email."

Task: Locate the product, add it to the cart, and successfully apply the discount code 'SAVE20' at checkout.

Platform: SharePoint Online

Scenario Context: "You're a project manager kicking off a new marketing campaign. You need to grab the approved brand assets and the latest project timeline from the company intranet."

Task: Navigate to the Marketing department's site, find the 'Brand Assets' library, download the company logo, and then find the 'Q4 Campaign Timeline' document.

The difference between a task and a scenario is motivation. A task says 'do this,' while a scenario says 'you need this because...' This small shift in framing is crucial for eliciting authentic user behavior.

Recruiting Your Ideal Participants

Your test is only as good as your participants. This might be the most critical part of your blueprint: finding users who genuinely represent your target audience. Testing with the wrong people gives you data that looks clean but leads to terrible decisions.

For a Sitecore-powered customer portal, you need actual customers who match your key personas. For a SharePoint intranet, that means grabbing employees from different departments with a mix of technical skills.

Participant Sourcing Strategies

- Tap into Existing User Bases: This is the low-hanging fruit. For SharePoint, your employees are a built-in recruitment pool. For Sitecore, you can leverage customer data from Sitecore CDP to segment and reach out to active customers.

- Use Professional Recruiting Services: When you need to get hyper-specific, agencies can find participants who meet very narrow demographic and psychographic criteria. This is perfect for testing niche user journeys.

- Try Website Pop-ups or Banners: A simple, non-intrusive banner on your Sitecore site, perhaps configured through Sitecore Personalize, can invite real users to participate in exchange for a small incentive.

A well-crafted testing blueprint ensures every move you make is deliberate and focused. It ties your efforts directly to tangible business goals, making the insights you gather not just interesting, but truly impactful. Mapping out these initial scenarios is also a great starting point for seeing the bigger picture of a user's entire path; you can expand on this by exploring our customer journey mapping template.

Choosing Your Testing Tools and Methods

With a solid plan in place, it's time to pick the right tools and methods for your usability test. This isn't about finding a single "best" way to do things but rather about building a versatile toolkit. The goal is to match your approach to the specific questions you need to answer about your platform, whether that’s Sitecore or SharePoint.

The right choice depends entirely on what you're trying to achieve. Are you digging into why users get stuck on a complex, personalized Sitecore journey? Or do you just need to quickly validate if a new SharePoint document library is intuitive for a big group of employees? Each question demands a different approach.

Moderated vs. Unmoderated Testing

Your first big decision is whether to run a moderated or unmoderated test. A moderated test is a live, guided session where a facilitator observes a participant in real-time. This method is priceless for digging into the nuances of complex user behaviors and motivations.

For instance, if you're testing a highly personalized customer portal on Sitecore XP, a moderated session lets you ask follow-up questions like, "What did you expect to see when you clicked that personalized banner?" That direct interaction provides rich, qualitative insights you just can't get any other way.

On the other hand, unmoderated testing lets participants complete tasks on their own, without a facilitator watching live. Typically, their screen and voice are recorded by a software platform. This method is fantastic for gathering quantitative data and validating specific design choices quickly and at scale.

A perfect example is testing a new document library in SharePoint. You could ask 50 employees to find and download a specific file, then measure the success rate and average time on task. It’s an efficient way to get a broad view of usability for more straightforward tasks.

Moderated testing answers the 'why' behind user actions, offering deep qualitative insights. Unmoderated testing answers 'what' users do and 'how many' succeed, providing valuable quantitative data.

Beyond direct tests, you can also gather incredible qualitative data when you learn how to conduct effective user interviews. These interviews often happen before or alongside usability testing to get a deeper understanding of user needs.

Remote vs. In-Person Sessions

Next, you need to decide on the testing environment: remote or in-person. Remote usability testing allows people to participate from their own home or office, using their own devices. It’s usually more cost-effective, faster to set up, and gives you access to a much wider pool of participants from different geographical locations.

In-person testing, however, brings the participant into a controlled lab environment. While it’s definitely more resource-intensive, it gives you a front-row seat to observe subtle cues like body language and moments of frustration that are easy to miss on a video call. This can be especially useful when you're testing complex workflows on specialized enterprise systems.

Selecting the Right Tools for the Job

The tools you choose will ultimately bring your testing plan to life. The global market for usability testing tools is projected to grow from $1.51 billion in 2024 to $10.41 billion by 2034, which shows how critical UX is becoming for gaining a competitive edge.

Your toolkit should be a mix of platforms that help you capture both the "what" (quantitative data) and the "why" (qualitative data).

Essential Tool Categories:

- Session Recording & Heatmaps: Tools like Hotjar or Crazy Egg show you exactly where users click, how far they scroll, and how they move their mouse. This is phenomenal for visualizing user behavior on a Sitecore landing page to see if your calls-to-action are even being noticed.

- All-in-One Testing Platforms: Services such as UserTesting or Maze let you run both moderated and unmoderated tests, recruit participants, and analyze the results all in one place. They really streamline the entire process.

- Survey and Feedback Tools: Sometimes, you just need to ask a direct question. Platforms like SurveyMonkey or Typeform are perfect for gathering post-task feedback on things like user satisfaction or perceived ease of use.

- AI-Powered Analytics: Modern platforms are increasingly using AI to analyze user behavior in real time. To see how this is changing the game, check out our article on how AI analyzes user behavior in real time.

By blending these different methods and tools, you can build a truly comprehensive picture of your user experience. This hybrid approach ensures you capture not only what users are doing but, more importantly, why they are doing it.

Running a Smooth and Insightful Test Session

All your careful planning comes down to this: the test session. This is where the rubber meets the road, and your ability to run a smooth, unbiased session will make or break the quality of the feedback you get. The real goal here is to create an environment where participants feel comfortable enough to share their unfiltered thoughts, turning simple observation into a goldmine of insights.

Running a great session is part art, part science. The science is in sticking to your script and capturing data consistently. The art? That lies in building instant rapport and guiding the user without actually leading them. This delicate balance is especially critical when you're testing a complex platform like a Sitecore or SharePoint implementation.

Mastering the Art of Moderation

The moderator’s job is so much more than just reading instructions from a script. You’re the user's guide, confidant, and chief observer, all rolled into one. Your first and most important task is to make the participant feel at ease from the moment the session begins, whether they walk into a lab or join a video call.

Kick things off by explaining that there are no right or wrong answers—you're testing the platform, not them. This simple statement is incredibly effective at relieving performance anxiety and encouraging total honesty. From there, your most powerful tool is the "think-aloud" protocol. Gently encourage users to say whatever comes to mind—their thoughts, expectations, and frustrations—as they work through the tasks.

Asking open-ended, non-leading questions is just as crucial. Instead of asking, "Was that button confusing?" (which plants the idea that there's a problem), try something like, "Talk me through what you were thinking as you looked for a way to finish that step." This subtle shift invites a story, not just a yes or no.

Capturing Data Systematically

While the participant is busy with their tasks, your job is to capture every critical piece of data. This isn't about scribbling a few notes; it's about using a structured approach to ensure your findings are consistent and reliable across every single session.

Screen and audio recording are non-negotiable. They create an objective record of the user's journey, capturing all the hesitations, wrong clicks, and sighs of frustration that your notes might miss. These video clips become powerful, undeniable proof when you're presenting findings to stakeholders later.

Beyond just recording, you need a solid note-taking strategy and a plan for acting on what you observe. One of the most effective frameworks I've come across for that, especially on agile projects, is the RITE method (Rapid Iterative Testing and Evaluation).

- How RITE Works: Instead of waiting until all tests are done, the team observes a session, spots a clear usability issue with an obvious fix, and implements the change before the next session starts. This lets you validate solutions almost instantly.

- Sitecore Example: A user struggles to find the "Request a Demo" CTA on a product page. The team sees this, agrees the button is buried, and a developer moves it to a more prominent spot before the next participant arrives.

- SharePoint Example: An employee can't figure out how to upload a document to a specific library. The team quickly adds a clearer label or a "New Document" button for the next user to try.

The RITE method transforms usability testing from a simple diagnostic tool into a real-time problem-solving engine. It’s all about finding and fixing issues with incredible speed.

Measuring Success with Key Metrics

To back up your qualitative observations, you absolutely need to capture quantitative data. These hard numbers help you track improvements over time and show leadership just how severe an issue really is.

Here are a few essential metrics to track:

- Task Success Rate: The most fundamental metric. Did the user complete the task? You can track this as a simple binary (Yes/No) or on a graded scale (e.g., Completed with ease, Completed with difficulty, Failed).

- Time on Task: How long did it take them? A task that takes way longer than expected points to friction, even if the user eventually succeeded.

- Error Rate: How many mistakes did the user make while trying to complete a task? This helps you quantify just how difficult or confusing an interface is.
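To show how these three metrics fall out of your session notes, here is a minimal sketch. The `TaskAttempt` record and the sample results are invented for illustration, and "error rate" is treated here as errors per attempt, which is only one of several conventions teams use.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    participant: str
    completed: bool   # binary task success
    seconds: float    # time on task
    errors: int       # wrong clicks, dead ends, and so on


def summarize(attempts: list[TaskAttempt]) -> dict[str, float]:
    """Compute task success rate, mean time on task, and errors per attempt."""
    if not attempts:
        raise ValueError("need at least one attempt")
    return {
        "success_rate": sum(a.completed for a in attempts) / len(attempts),
        "mean_seconds": mean(a.seconds for a in attempts),
        "errors_per_attempt": sum(a.errors for a in attempts) / len(attempts),
    }


# Invented results for one task across five participants.
attempts = [
    TaskAttempt("P1", True, 42.0, 0),
    TaskAttempt("P2", True, 95.0, 2),
    TaskAttempt("P3", False, 180.0, 4),
    TaskAttempt("P4", True, 61.0, 1),
    TaskAttempt("P5", False, 150.0, 3),
]
summary = summarize(attempts)
print(summary)
```

Keeping one record per participant per task makes it easy to compare the same task across test rounds.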

After the session, always use a standardized questionnaire to measure user satisfaction. The System Usability Scale (SUS) is a trusted, ten-question survey that gives you a reliable score for your platform's perceived usability. It’s quick for users to fill out and provides a single, comparable score you can benchmark against.
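SUS scoring follows a fixed recipe: answers are on a 1 to 5 scale, odd-numbered items contribute the answer minus 1, even-numbered items contribute 5 minus the answer, and the sum is multiplied by 2.5 to give a score from 0 to 100. A minimal sketch, with invented answers from one participant:

```python
def sus_score(responses: list[int]) -> float:
    """Score one SUS questionnaire (ten answers on a 1-5 scale) from 0 to 100."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly ten items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each answer must be between 1 and 5")
    total = 0
    for index, answer in enumerate(responses, start=1):
        if index % 2 == 1:
            total += answer - 1   # odd items are positively worded
        else:
            total += 5 - answer   # even items are negatively worded
    return total * 2.5


# Invented answers, in questionnaire order.
answers = [4, 2, 5, 1, 4, 2, 4, 2, 5, 1]
print(sus_score(answers))
```

Average the per-participant scores to get the figure you benchmark from round to round.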

Turning Raw Data into Actionable Insights

Capturing data during a usability test is only half the battle. You’ve got session recordings, stacks of notes, and user feedback—but raw observations are useless until you give them meaning. This is the part where you transform all that data into a clear, compelling story that gets stakeholders on board and drives real change.

This is where you connect the dots between a user’s hesitation and a direct hit to the business's bottom line.

The goal isn't just to list every problem you found. It’s about synthesizing those findings, spotting the patterns, and building a narrative that resonates with everyone—from developers working on your Sitecore site to executives overseeing your SharePoint strategy. A well-told story, backed by solid evidence, is what turns insights into action.

Synthesizing Findings with Affinity Mapping

After your test sessions, you’re left with a mountain of qualitative data. User quotes, your own observations, facilitator notes—it’s a lot to process. The best way I’ve found to make sense of it all is through affinity mapping. It's a simple, collaborative technique that helps your team visually cluster individual data points into meaningful groups.

Think of each observation as a single sticky note. For a Sitecore Commerce test, you might jot down things like:

- "User couldn't find the guest checkout option."

- "Participant seemed confused about shipping costs."

- "User said, 'I wish I could see my loyalty points here.'"

Get these notes up on a whiteboard or a digital canvas. As you start grouping them, clear patterns will emerge. The notes about guest checkout and shipping costs might come together under a theme you label "Checkout Friction." The loyalty points comment could fall into a group called "Lack of Personalization Cues." Just like that, scattered feedback becomes a structured overview of the biggest pain points.
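If your notes already live in a spreadsheet or a digital canvas export, the same clustering can be sketched in a few lines. This assumes the team has already agreed on a theme for each note (that judgment is the real work); the data structure is hypothetical and the notes are the ones from the example above.

```python
from collections import defaultdict

# Each observation is paired with the theme the team assigned while clustering.
observations = [
    ("User couldn't find the guest checkout option.", "Checkout Friction"),
    ("Participant seemed confused about shipping costs.", "Checkout Friction"),
    ("User said, 'I wish I could see my loyalty points here.'", "Lack of Personalization Cues"),
]


def cluster_by_theme(notes: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group note text under its theme, preserving the order notes were added."""
    clusters = defaultdict(list)
    for note, theme in notes:
        clusters[theme].append(note)
    return dict(clusters)


clusters = cluster_by_theme(observations)
# Print the largest themes first so the biggest pain points lead the overview.
for theme, notes in sorted(clusters.items(), key=lambda kv: len(kv[1]), reverse=True):
    print(f"{theme} ({len(notes)} notes)")
    for note in notes:
        print(f"  - {note}")
```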

Prioritizing Issues for Maximum Impact

You can’t fix everything at once. Trying to is a surefire way to overwhelm your team and get nothing meaningful done. The next step is to prioritize problems based on how severe they are and how often they occurred, linking each one directly back to a business outcome.

A simple but incredibly effective framework for this uses two axes:

- Frequency: How many users ran into this issue? A problem that tripped up one out of five users is less urgent than one that stopped all five.

- Severity: How badly did this issue derail the user? A typo is a low-severity issue, but a broken "Add to Cart" button is a critical blocker that kills the entire journey.

Your highest-priority items are those that are high-frequency and high-severity. These are the "showstoppers" that are almost certainly costing you revenue or productivity. They need to be fixed, and fast.

For instance, if 80% of your testers on a SharePoint intranet failed to find the new HR policy library (high frequency) and gave up (high severity), that issue goes straight to the top of your list.
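One way to make the two axes comparable is a simple score: the share of participants affected multiplied by a severity rating. The sketch below assumes a 1 to 4 severity scale and uses invented findings; both the scale and the multiplication are illustrative choices, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    title: str
    users_affected: int   # participants who hit the issue
    severity: int         # assumed scale: 1 = cosmetic ... 4 = blocker


def priority(issue: Issue, total_users: int) -> float:
    """Frequency (share of users affected) multiplied by severity."""
    return (issue.users_affected / total_users) * issue.severity


def rank(issues: list[Issue], total_users: int) -> list[Issue]:
    """Return issues sorted from highest to lowest priority score."""
    return sorted(issues, key=lambda i: priority(i, total_users), reverse=True)


# Invented findings from a five-participant round.
issues = [
    Issue("Typo on the confirmation page", users_affected=5, severity=1),
    Issue("Could not find the HR policy library", users_affected=4, severity=4),
    Issue("Unclear shipping cost label", users_affected=2, severity=2),
]
for issue in rank(issues, total_users=5):
    print(f"{priority(issue, 5):.1f}  {issue.title}")
```

Notice that the typo, seen by everyone, still ranks far below the blocker: frequency alone does not make an issue urgent.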

Crafting a Compelling Usability Report

Your findings need to land with impact. A dense, 50-page document will be skimmed at best, ignored at worst. Your usability report should be a visual, data-driven story that makes the user's struggle undeniable.

Here’s a structure that works well:

- Executive Summary: Start with the big picture. Briefly cover the test goals, who you tested with, and a bulleted list of the top 3-5 critical findings and your recommendations.

- Key Findings: Dedicate a slide or section to each major theme you identified. For each one, include a clear problem statement, the evidence to back it up, and a specific, actionable recommendation.

- Powerful Evidence: Don't just tell stakeholders what happened; show them. Embed short video clips of users getting stuck. Pull out powerful, direct quotes. A clip of a user sighing, "I'm so lost, I'm just going to give up," often lands harder than any statistic you can share.

- Prioritized Recommendations: Wrap up with a clear, prioritized list of what to fix next. This gives the team a concrete roadmap for action.

This structured approach is crucial for any platform, but it’s also a key part of a much larger review process. You can dig deeper by exploring our detailed user experience audit checklist.

Presenting to Stakeholders and Securing Buy-In

How you present your findings is just as important as the findings themselves. Your goal is to build empathy and create a shared sense of urgency. Remember to tailor your presentation to your audience. Developers will want the technical nitty-gritty, while executives need to see the business impact.

The broader tech community has long recognized the value of robust testing. For example, a reported 31,854 companies have adopted Selenium, a popular open-source browser automation tool. While it's primarily used for QA rather than usability research, its ecosystem can be integrated into usability workflows, especially alongside session replay tools. This reflects a global trend toward scalable testing, and you can discover more insights about testing tool adoption to see how deep this commitment runs.

When presenting, always frame problems in terms of business metrics. Instead of saying, "Users found the navigation confusing," try this: "Confusion in the main navigation led to a 75% task failure rate on finding our key services, which directly impacts lead generation." This language connects user frustration to the bottom line, making it impossible for anyone to ignore.

Common Usability Testing Questions Answered

Even with a solid plan, a few questions always pop up when you're in the trenches of usability testing. Getting straight answers is key to feeling confident in your process, especially when you're dealing with complex platforms like Sitecore or SharePoint.

Let's tackle some of the most frequent questions we hear and cut through the noise with practical advice.

How Many Participants Do I Really Need for a Test?

You’ve probably heard the magic number: five users. This isn't just a random guess; it comes from research, most often attributed to Jakob Nielsen and Tom Landauer, showing that testing with about five users can uncover roughly 85% of the usability problems on a website. It’s a fantastic starting point for most projects.

But the real answer is, "it depends." The complexity of your platform and the diversity of your audience matter.

For a big, multifaceted Sitecore XP site with different journeys for various customer types, you’re better off running separate, small tests of 3-5 users for each key persona. This approach ensures you’re actually testing the unique paths and personalization features that matter to each group.

If you’re testing a SharePoint intranet, grabbing 5-8 employees from different departments will give you much broader coverage. This accounts for the different roles, permissions, and tech-savviness you'll find across an organization.

The true power isn't in one massive test, but in iteration. It is far more effective to run three separate, small tests of five users—and make improvements between each round—than to conduct a single large test with fifteen participants all at once.
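The five-user figure comes from a simple model: if each participant reveals a given problem with probability L, then n participants reveal it with probability 1 - (1 - L)^n. The sketch below assumes L = 0.31, the average usually quoted from Nielsen and Landauer's data; your own platform's rate may be higher or lower, so treat the output as a rough guide.

```python
def share_of_problems_found(users: int, detect_rate: float = 0.31) -> float:
    """Expected share of problems seen at least once by `users` participants."""
    if users < 0:
        raise ValueError("users must be non-negative")
    if not 0 <= detect_rate <= 1:
        raise ValueError("detect_rate must be between 0 and 1")
    return 1 - (1 - detect_rate) ** users


for n in (1, 3, 5, 8, 15):
    print(f"{n:>2} users: {share_of_problems_found(n):.1%}")
```

The curve flattens quickly after five users, which is why spending fifteen participants across three rounds, with fixes in between, tends to teach you more than one round of fifteen.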

What's the Difference Between Usability Testing and A/B Testing?

People often mix these two up, but they answer fundamentally different questions. Think of them as complementary tools in your optimization kit—one tells you why something is happening, and the other tells you what is happening.

Usability Testing is Qualitative: Its goal is to get to the "why." You're observing a handful of real people as they interact with your Sitecore site. You watch where they struggle, listen to their thought process out loud, and uncover the sources of their confusion.

A/B Testing is Quantitative: This is all about measuring the "what" at scale. In Sitecore, this is a native feature. You can easily pit two versions of a page against each other (Version A vs. Version B) with live traffic to see which one performs better against a specific goal, like getting more sign-ups or clicks.

Here’s a great workflow: start with usability testing to find problems and generate hypotheses for how to fix them. Then, use Sitecore’s built-in A/B testing capabilities to validate which of your proposed solutions actually works best with a much larger audience.
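To get a feel for what "works best with a much larger audience" means in numbers, here is a minimal sketch of a two-proportion z-test, a common way to check whether a difference in conversion rates is likely to be more than noise. It is not Sitecore's API and not necessarily how its reports are calculated; the function name and the traffic figures are invented for illustration.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference B minus A."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both variants need at least one visitor")
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / std_err
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)


# Invented traffic: Version A converts 200 of 5,000 visitors, Version B 260 of 5,000.
z, p_value = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be chance alone, but it says nothing about why users preferred one version, which is exactly the gap usability testing fills.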

How Can I Conduct Usability Testing on a Tight Budget?

You don't need a huge budget to get huge insights. High-impact usability testing can be surprisingly affordable if you get a little creative.

One of the easiest ways to start is with "guerrilla testing." Seriously, just go to a coffee shop and offer to buy someone a latte if they'll spend five minutes performing a few quick tasks on your site. For an internal platform like a SharePoint intranet, your colleagues are your built-in test group, and the only real cost is their time.

There are also plenty of free and low-cost tools for screen recording, running unmoderated tests, and sending out quick surveys after a session. The most important thing is just to start. Even watching two or three people who've never seen your project before will reveal critical issues that your team has become completely blind to.

At Kogifi, we build these principles into every digital experience we create. Our expertise in Sitecore and SharePoint ensures that your platform is not only powerful but also perfectly aligned with your users' needs. Learn more about how our tailored DXP solutions can elevate your business.